What Is Big Data? The Complete Picture, Beyond The 4 V's

For companies too small to run their own data centers, colocation facilities ("colos") offer a cost-effective way to stay in the Big Data game. Colocation data centers bring in over $30 billion today, and revenue is forecast to reach $136.65 billion by 2028. Our data integration solutions automate the process of accessing and integrating data from legacy environments into next-generation systems, preparing it for analysis with modern tools. Schools, colleges, universities, and other educational institutions have a wealth of data available about their students, faculty, and staff.

Apache Kylin provides an online analytical processing (OLAP) engine designed to support extremely large data sets. Because Kylin is built on top of other Apache technologies, including Hadoop, Hive, Parquet and Spark, it can easily scale to handle those large data loads, according to its backers. Another open source technology maintained by Apache is used to manage the ingestion and storage of large analytics data sets on Hadoop-compatible file systems, including HDFS and cloud object storage services. Hive is SQL-based data warehouse infrastructure software for reading, writing and managing large data sets in distributed storage environments. It was created by Facebook and then open sourced through Apache, which continues to develop and maintain the technology. Databricks Inc., a software vendor founded by the creators of the Spark processing engine, developed Delta Lake and then open sourced the Spark-based technology in 2019 through the Linux Foundation.

Challenges Related To Big Data

Using artificial intelligence, they then refined their algorithms to predict the number of upcoming admissions for different days and times. But data without analysis is rarely worth much, and analysis is the other half of the big data process. This analysis is called data mining, and it seeks to find patterns and anomalies within these large datasets.
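As a minimal illustration of what "searching for anomalies" can mean, the sketch below flags values that sit unusually far from the mean of a series. The z-score rule, the `zscore_anomalies` name, the threshold, and the admission counts are all hypothetical choices for illustration; the source does not say which method was used, and real data mining pipelines use far more robust techniques.

```python
from statistics import mean, pstdev


def zscore_anomalies(values, threshold=3.0):
    """Return the indices of values whose z-score magnitude exceeds threshold.

    Hypothetical helper: a simple z-score rule, not any specific product's method.
    """
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # every value is identical, so nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily admission counts with one unusual spike.
daily = [120, 118, 125, 122, 119, 121, 310, 117]
print(zscore_anomalies(daily, threshold=2.0))  # [6]
```

The threshold is lowered to 2.0 here because, with only eight points, a single outlier cannot reach a population z-score of 3; on larger series the conventional 3.0 is a common starting point.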

The storage segment is expected to grow 19.2% annually between 2020 and 2025. Integrating modern technologies with big data is helping companies make complex data more useful and accessible through graphics and improve their visualization capabilities. In 2020, enterprise spending on data centers and cloud infrastructure services, such as the latest databases, storage, and networking solutions, reached $129.5 billion.
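To make the growth figure concrete, the sketch below computes the total multiple implied by compounding 19.2% per year over 2020 to 2025. It assumes the 19.2% is a compound annual rate applied over five yearly periods; the source gives no base value, so only the multiple is computed, and the `compound_growth_factor` name is hypothetical.

```python
def compound_growth_factor(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding annual_rate once per year."""
    return (1.0 + annual_rate) ** years


# Assumption: 19.2% compounded once per year, 2020 -> 2025 = five periods.
factor = compound_growth_factor(0.192, 2025 - 2020)
print(f"{factor:.2f}x")  # 2.41x
```

Under that assumption, the segment would end the period at roughly 2.4 times its 2020 size.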

The Big Data industry has many facets, from data centers and cloud services to IoT devices and predictive analytics tools. But what does the market look like from a raw-numbers perspective? Organizations across every industry want to use data to collect customer information, track inventory, manage human resources, and more. In fact, we create data at such an alarming rate that we've had to coin new words like zettabyte to measure it. For organizations that want to succeed, big data offers a powerful solution, and there are several reasons why data matters.

50% of US executives and 39% of European executives said budget constraints were the main challenge in turning Big Data into a profitable business asset. Rounding out the top five challenges were data security concerns, integration challenges, a lack of technical expertise, and the proliferation of data silos. Insurance companies, for example, struggle to increase organizational agility in the face of rapidly evolving business conditions and a changing regulatory environment.

Every day you watch videos and visit websites and blogs on your computer or smartphone, and each of these activities adds more data to your profile in someone's database. This data is then used to target you with ads or political campaigns, or simply to predict what people like you will do in the future.

Key Market Players

There were 79 zettabytes of data generated worldwide in 2021. Facebook, for example, collects around 63 distinct pieces of data for its API.
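For a sense of scale, the 2021 figure can be converted into more familiar units. This worked conversion assumes decimal SI prefixes (1 ZB = 10^21 bytes, 1 TB = 10^12 bytes); the source does not say whether decimal or binary units were meant.

```latex
79\ \text{ZB} = 79 \times 10^{21}\ \text{bytes}
             = \frac{79 \times 10^{21}}{10^{12}}\ \text{TB}
             = 7.9 \times 10^{10}\ \text{TB}
```

That is roughly 79 billion terabytes.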

Inside The Data Transformation Cement Mixer - Forbes

Posted: Tue, 17 Oct 2023 06:01:14 GMT

Leading organizations that collect big data use this information to gauge customer preferences and improve their products or services. Companies are gaining many different kinds of insights from big data. In this sponsored article, Rohit Choudhary, founder and CEO of Acceldata, breaks down four common myths and misconceptions about observability. In today's economic climate, many companies are tightening their belts.

What Does A Big Data Life Cycle Look Like?

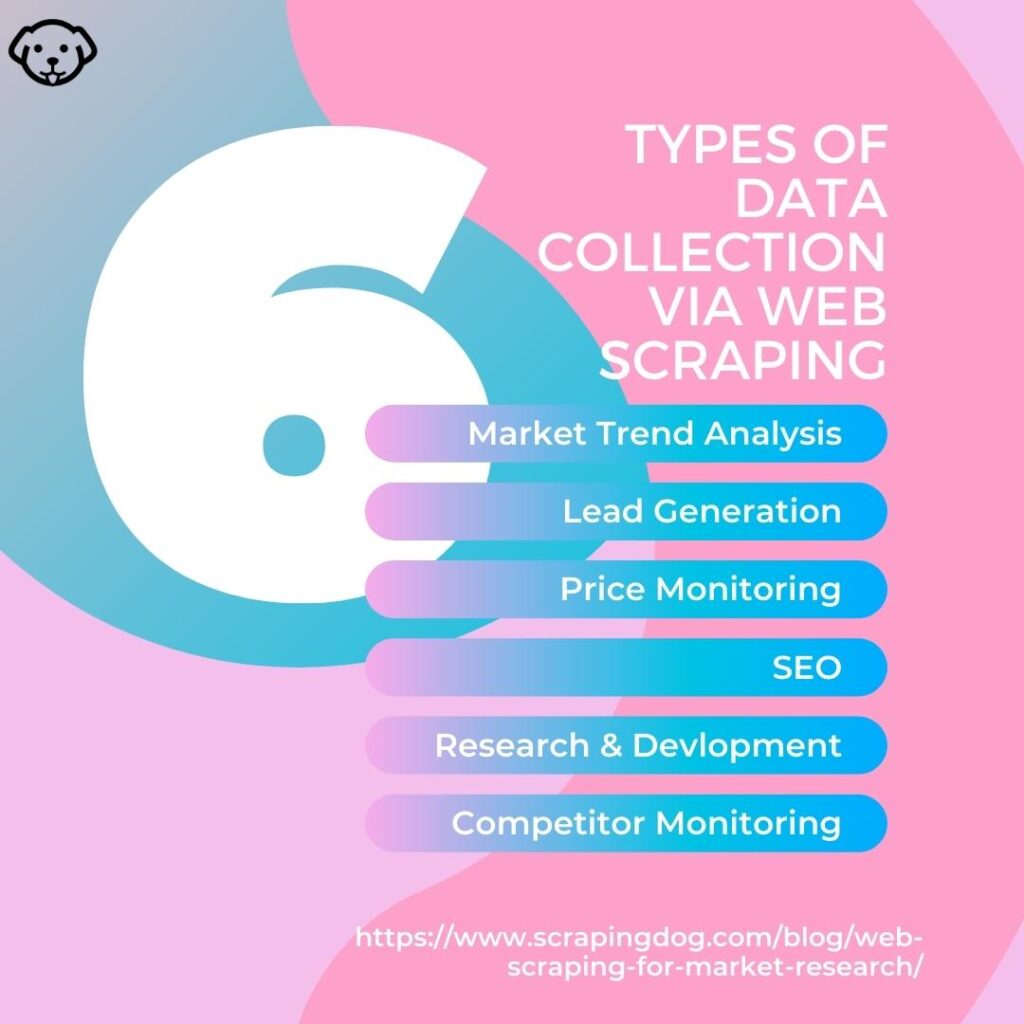

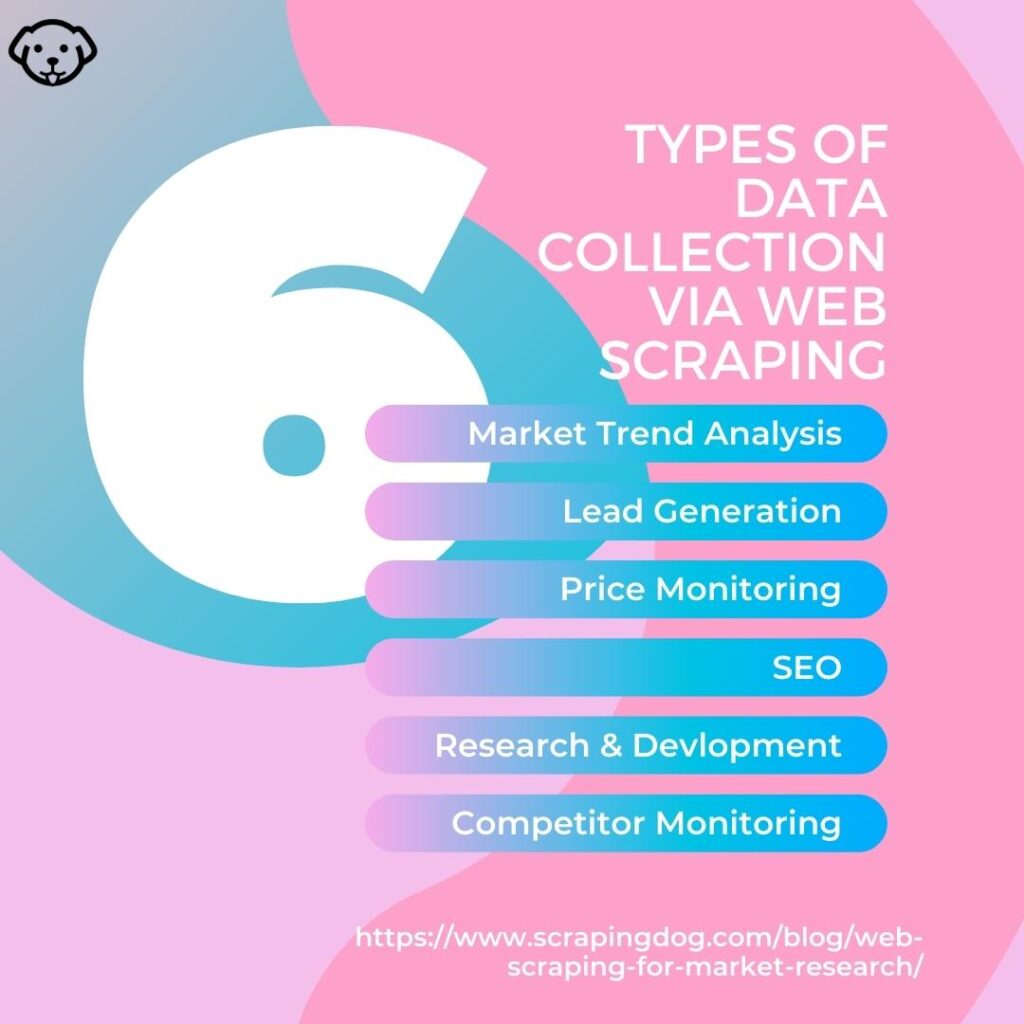

Infographics, which are popping up everywhere these days, are a great way to clarify the complex. They are usually carefully crafted into a poster or presentation to convey meaning, but they fall short of providing real-time information because they are typically fixed at a point in time. Dashboards can be a useful tool, but they are too often poorly designed.